In the above visualization, each colored dot represents a Twitter account in the QAnon network. The accounts that are inactive as of January 21st are highlighted in yellow.

Impact of Twitter’s Recent QAnon Takedown

Analysis of a QAnon network map produced by Graphika indicates that more than 60% of these Twitter accounts are no longer online. The network represents 13,856 highly connected accounts that were engaging with QAnon hashtags last spring, 8,859 of which (about 64%) are now inactive. Some of these accounts were likely deactivated by the users themselves or suspended in earlier enforcement actions. However, we believe the vast majority were removed in the most recent round of Twitter suspensions, announced on January 12th.
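The headline figures can be checked with simple arithmetic. The short sketch below uses only the two counts reported in the paragraph above. The constant and function names are illustrative and are not part of Graphika's tooling.

```python
# Counts reported for Graphika's QAnon network map (names are illustrative).
TOTAL_ACCOUNTS = 13_856
INACTIVE_ACCOUNTS = 8_859


def inactive_share(total: int, inactive: int) -> float:
    """Return the fraction of accounts in the map that are no longer online."""
    if total <= 0:
        raise ValueError("total must be positive")
    return inactive / total


share = inactive_share(TOTAL_ACCOUNTS, INACTIVE_ACCOUNTS)
active = TOTAL_ACCOUNTS - INACTIVE_ACCOUNTS

print(f"inactive: {share:.1%}")   # inactive: 63.9%
print(f"still active: {active}")  # still active: 4997
```

The remaining count of 4,997 matches the number of accounts the report later describes as still active.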

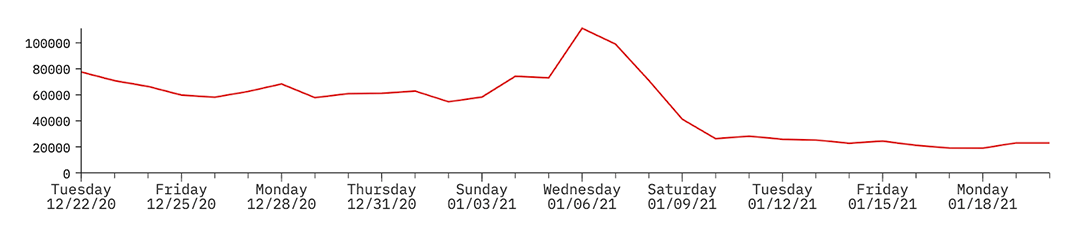

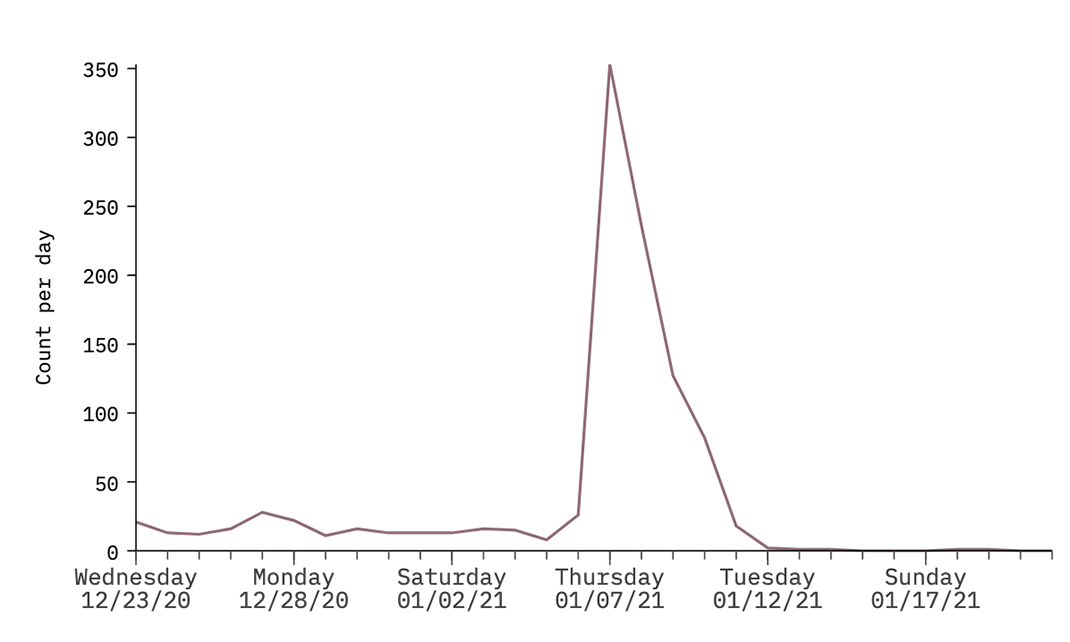

The timeline of tweet volume produced by QAnon users in this network shows a clear spike on January 6th, the day of the riots at the US Capitol Building in Washington, DC, followed by a rapid decrease in activity over the following days. There is a notable decline in the two days before Twitter announced that it had removed more than 70,000 accounts sharing QAnon content on its platform. Over that period, the volume of content produced by the most highly connected QAnon accounts on Twitter fell by 70 to 80%.

Tweet volume over time for the core QAnon community in Graphika’s network map; activity decreases significantly between January 6th and 10th.

This latest wave of suspensions appears to have affected all of the online communities that make up this QAnon Twitter network. In addition to the most dedicated QAnon adherents, Trump-supporting accounts that engaged with QAnon content and Japanese QAnon users were also heavily affected. For example, 45% of the Japan-based accounts in Graphika’s QAnon network map are now inactive, compared with 62% of the core US QAnon conspiracy community.

Preliminary analysis suggests that many of the removed accounts served as influential bridges between the sub-groups that make up the larger QAnon community. Twitter announced that it had removed highly influential QAnon accounts, including those of Michael Flynn, former Trump attorney Sidney Powell, and former 8kun administrator Ron Watkins. The removed accounts appear to have been highly interconnected, suggesting they were well integrated into the network’s activity.

Given the prominent, “bridging” role these accounts played in the overall network, the suspensions have had the knock-on effect of reducing the number of connections between the remaining Twitter users. The result is a less dense network made up of isolated splinter communities. Their removal is likely to significantly affect the social cohesion and future coordination of the QAnon community on Twitter.
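To illustrate why removing “bridging” accounts can fragment a network, here is a minimal sketch on a small, entirely hypothetical graph. It does not use Graphika’s data and is not a description of Graphika’s methodology. The graph has two tightly knit clusters joined only through one bridge account. The sketch counts connected components and computes undirected density (2E / (N(N − 1))) before and after that account is removed.

```python
from collections import deque

# Hypothetical toy network: two tight clusters linked only via "bridge".
EDGES = [
    ("a1", "a2"), ("a1", "a3"), ("a2", "a3"),  # cluster A
    ("b1", "b2"), ("b1", "b3"), ("b2", "b3"),  # cluster B
    ("bridge", "a1"), ("bridge", "a2"),
    ("bridge", "b1"), ("bridge", "b2"),
]


def build_graph(edges, removed=frozenset()):
    """Build an undirected adjacency map, skipping edges that touch removed nodes."""
    graph = {}
    for u, v in edges:
        if u in removed or v in removed:
            continue
        graph.setdefault(u, set()).add(v)
        graph.setdefault(v, set()).add(u)
    return graph


def density(graph):
    """Undirected density: 2E / (N * (N - 1))."""
    n = len(graph)
    if n < 2:
        return 0.0
    e = sum(len(nbrs) for nbrs in graph.values()) // 2
    return 2 * e / (n * (n - 1))


def count_components(graph):
    """Count connected components with a breadth-first search."""
    seen = set()
    components = 0
    for start in graph:
        if start in seen:
            continue
        components += 1
        seen.add(start)
        queue = deque([start])
        while queue:
            node = queue.popleft()
            for nbr in graph[node]:
                if nbr not in seen:
                    seen.add(nbr)
                    queue.append(nbr)
    return components


before = build_graph(EDGES)
after = build_graph(EDGES, removed={"bridge"})
print(count_components(before), round(density(before), 3))  # 1 0.476
print(count_components(after), round(density(after), 3))     # 2 0.4
```

In this toy case, deleting a single bridging node splits one connected network into two isolated clusters and lowers overall density. Real network maps are far larger. A graph library such as NetworkX offers equivalent functions (`density`, `number_connected_components`).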

4,997 accounts from this network remain active. Those with the most in-map followers typically produce a high volume of content centered on support for Donald Trump, and they tend to avoid QAnon terminology or identifiers. In recent days, these accounts have been preoccupied with lamenting the end of the Trump presidency and sharing articles from right-wing news outlets that speculate on his next political move.

QAnon’s Role in the Covid-19 Anti-Vaxx Network

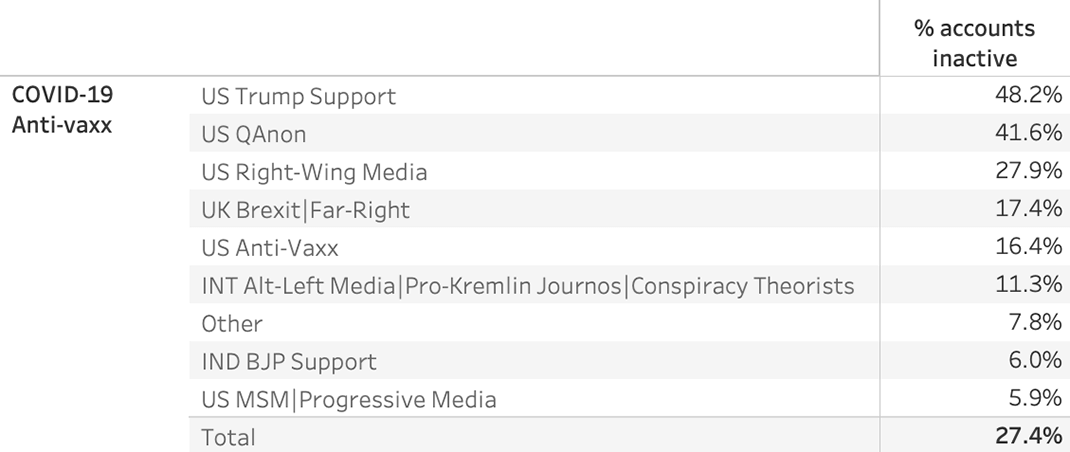

The impact of Twitter’s deplatforming action can also be measured by assessing QAnon’s role in spreading adjacent conspiracy theories. For example, in a network map of Twitter accounts opposing the Covid-19 vaccine, a group of QAnon supporters was central. QAnon accounts made up just 17% of the overall network. Even so, the group acted as a crucial bridge between Trump-supporting accounts and the core of the US anti-vaxx movement.

Since Twitter’s recent enforcement action, close to 50% of the QAnon accounts participating in the Covid-19 anti-vaxx conversation have been deactivated. As a result, these accounts can no longer carry Covid-19 vaccine conspiracies to peripheral groups. Examples include the Bill Gates ‘global depopulation’ and ‘Plandemic’ theories.

Table showing the suspension rate across different groups involved in the Covid-19 anti-vaccine network map.

How This Compares with Previous Enforcement

Graphika has conducted similar impact assessments at regular intervals following enforcement actions against QAnon by major social media platforms. In October of last year, Facebook and YouTube changed their platform policies. At that point, we observed that the takedowns carried out during the summer did not appear to have significantly affected the QAnon community’s ability to produce and consume conspiratorial content. As many researchers and journalists have noted, enforcement of these policy changes was patchy, particularly for non-English content. That analysis examined the same core QAnon network described above. It found that 85 to 95% of the accounts were still active in October, months after Twitter announced a ‘crackdown’ in July.

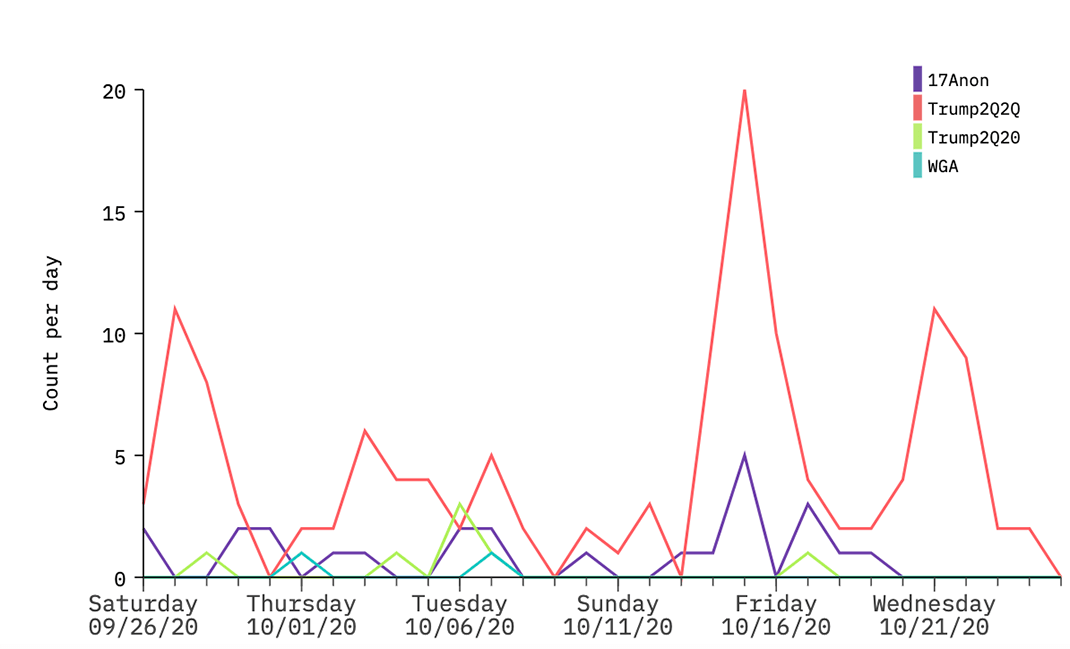

One particular point of interest following previous enforcement activity was what it meant for how QAnon operates as an online community. Graphika made two main observations. The first was that many QAnon accounts shifted their behavior, adapting their activity to avoid suspension. QAnon supporters typically moved away from their ‘trademark’ online signifiers, but the extent of this response depended on the specifics of each platform. On Twitter, supporters altered known QAnon hashtags, which generally appear in bios, usernames, and account descriptions. They did this predominantly by swapping letters and numbers (shown below).

In terms of narratives, there has been a broad effort to soften QAnon rhetoric. For example, campaigns raising awareness of ‘satanic child sex trafficking rings operating at the highest levels of US government’ were reframed as a focus on protecting the rights of children, which appeals to a more mainstream audience. Platforms have typically been slow to respond to these behavioral shifts.


Graph showing the use of altered QAnon hashtags over a 30-day period in October during which there were various platform ‘crackdowns’ on QAnon.

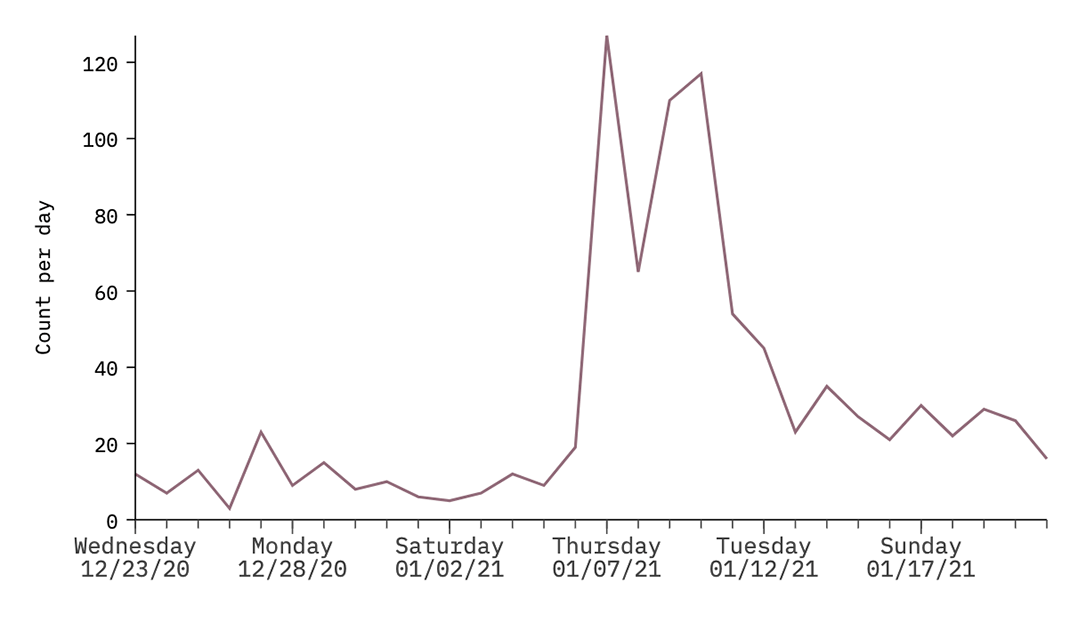

The second observation concerns a pattern common among communities that experience large-scale removal from mainstream social media platforms. Twitter recently took action, and Facebook and YouTube have changed their policies in recent months. Since then, many of the accounts that remain online have advocated migrating the QAnon community to alt-tech platforms such as Parler, Gab, CloutHub, Telegram, and MeWe. As shown below, QAnon accounts shared links to the Parler and Gab domains far more often on January 6th, the day of the Capitol Hill riots.

Tweets by US QAnon users containing links to parler.com (above) and gab.com (below); both show a rapid increase on January 6th.